Multi-container setups

What if we want to use a more powerful database engine, one that requires a database server running in the background? SQLite is fine for small applications, but let’s upgrade our app’s database to use Postgres.

The big selling point of using Docker Compose is that it lets you manage multiple containers at once. Although complex software could be composed by using just one container with lots of components running on the inside, splitting each part of your architecture into a separate container comes with a few benefits.

As I mentioned above, it’s nice to keep your dependencies separate. If your web server needs to upgrade its language or OS dependencies, you don’t want the nasty surprise of finding out your database server is incompatible with the change. If two components of your architecture rely on different versions of a Python library, putting them in separate containers means you don’t have to figure out language-specific mechanisms (such as virtual environments) for running them side by side. You can even run components that expect different base operating systems, such as different Linux distributions, without trouble.

We can update our docker-compose.yml to load in a Postgres image as well. Although we could create our own Dockerfile that constructs an image with Postgres installed, there’s already an official Postgres Docker image that we can run directly. In our docker-compose.yml, instead of adding a build: section (which tells Docker Compose where to find a Dockerfile to construct an image from scratch), we can use image: to load the existing image from Docker Hub.

```yaml
version: '3.8'

services:
  web:
    build: ./web-app
    ports:
      - '3000:3000'
    volumes:
      - './sqlite-data:/data'
  db:
    image: postgres:15.0
```

If we run docker compose up, Docker Compose will pull the official Postgres image and start it as a service called “db”. When the container starts, though, it immediately quits with an error message from Postgres.

```console
$ docker compose up
[+] Running 14/14
✔ db 13 layers [⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿] 0B/0B Pulled
✔ 578acb154839 Pull complete
... snip ...
✔ fd463ca121fb Pull complete
[+] Running 2/2
✔ Container docker-express-example-db-1 Created
✔ Container docker-express-example-web-1 Running
Attaching to docker-express-example-db-1, docker-express-example-web-1
docker-express-example-db-1 | Error: Database is uninitialized and superuser password is not specified.
docker-express-example-db-1 | You must specify POSTGRES_PASSWORD to a non-empty value for the
docker-express-example-db-1 | superuser. For example, "-e POSTGRES_PASSWORD=password" on "docker run".
docker-express-example-db-1 |
docker-express-example-db-1 | You may also use "POSTGRES_HOST_AUTH_METHOD=trust" to allow all
docker-express-example-db-1 | connections without a password. This is *not* recommended.
docker-express-example-db-1 |
docker-express-example-db-1 | See PostgreSQL documentation about "trust":
docker-express-example-db-1 | https://www.postgresql.org/docs/current/auth-trust.html
docker-express-example-db-1 exited with code 1
```

The Postgres image is expecting to be handed an environment variable with details about the database credentials we want it to use. The image’s Docker Hub page offers some more detailed documentation about which environment variables are used by the image. Let’s set just a POSTGRES_PASSWORD, using the docker-compose.yml syntax to set runtime environment variables. The POSTGRES_USER and POSTGRES_DB variables will default to postgres, according to the documentation.

```yaml
version: '3.8'

services:
  web:
    build: ./web-app
    ports:
      - '3000:3000'
    volumes:
      - './sqlite-data:/data'
  db:
    image: postgres:15.0
    environment:
      POSTGRES_PASSWORD: my_pg_password
```

Now, the Postgres container will set the superuser password to the value we’ve written here, so any other container that wants to connect will also need to know it. We’ll improve this password later. For now it isn’t world-accessible anyway: we haven’t published any ports for the db service, so Postgres isn’t exposed outside the host.
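The startup rules from the error message we saw earlier, together with the documented defaults for POSTGRES_USER and POSTGRES_DB, fit in a few lines of JavaScript. This is only a rough model for illustration, not the image’s real entrypoint (which is a shell script), and `effectivePostgresSettings` is a hypothetical name; it covers just the variables discussed here.

```javascript
// Rough model of the postgres image's startup rules (a sketch, not the real
// docker-entrypoint.sh). `env` is a plain object of environment variables.
function effectivePostgresSettings(env, { dataDirInitialized = false } = {}) {
  const password = env.POSTGRES_PASSWORD ?? '';
  const trustAll = env.POSTGRES_HOST_AUTH_METHOD === 'trust';

  // The image refuses to initialize a new database without a superuser
  // password, unless "trust" authentication was explicitly requested.
  if (!dataDirInitialized && password === '' && !trustAll) {
    throw new Error(
      'Database is uninitialized and superuser password is not specified.'
    );
  }

  // Documented defaults: the user defaults to "postgres", and the database
  // name defaults to whatever the user name is.
  const user = env.POSTGRES_USER || 'postgres';
  const database = env.POSTGRES_DB || user;
  return { user, database };
}

// Usage: the db environment from the docker-compose.yml above.
console.log(effectivePostgresSettings({ POSTGRES_PASSWORD: 'my_pg_password' }));
// → { user: 'postgres', database: 'postgres' }
```

Note that the password check only matters for an uninitialized data directory; once the database exists, the image starts it without re-checking these variables.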

If we rerun docker compose up, we’ll get a lot more log information from Postgres, including a line that looks like this:

```
docker-express-example-db-1 | PostgreSQL init process complete; ready for start up.
```

Notice that when we don’t pass --detach, Docker Compose shows log lines from every container it started. If we did detach, we can still run docker compose logs to see the logs from all containers in the current Compose project, or docker compose logs [service] (where [service] is web or db) to see a single service’s logs.

We really do have two distinct containers running now. We can bash our way into the db container and see that, unlike our web container, there’s no /app or /data folder.

```console
$ docker compose exec db bash
root@8dafcf46f0f3:/# ls
bin boot dev docker-entrypoint-initdb.d etc home lib lib32 lib64 libx32 media mnt opt proc root run sbin srv sys tmp usr var
```

The Postgres image reads the PGDATA environment variable to decide where to store database files. We can choose, for example, /pg-data:

```yaml
version: '3.8'

services:
  web:
    build: ./web-app
    ports:
      - '3000:3000'
    volumes:
      - './sqlite-data:/data'
  db:
    image: postgres:15.0
    environment:
      POSTGRES_PASSWORD: my_pg_password
      PGDATA: /pg-data
```

If we run docker compose up and wait for the database to become ready, we can peer into the container from another terminal:

```console
$ docker compose exec db bash
root@e39c627300cc:/# ls
bin boot dev docker-entrypoint-initdb.d etc home lib lib64 media mnt opt pg-data proc root run sbin srv sys tmp usr var
root@e39c627300cc:/# ls /pg-data/
base pg_commit_ts pg_hba.conf pg_logical pg_notify pg_serial pg_stat pg_subtrans pg_twophase pg_wal postgresql.auto.conf postmaster.opts
global pg_dynshmem pg_ident.conf pg_multixact pg_replslot pg_snapshots pg_stat_tmp pg_tblspc PG_VERSION pg_xact postgresql.conf postmaster.pid
root@e39c627300cc:/#
```

Now, we can play around with the database. Let’s put some data in the postgres database using the psql tool.

```console
$ docker compose exec db psql -U postgres postgres -c "CREATE TABLE my_table (name TEXT);"
$ docker compose exec db psql -U postgres postgres -c "INSERT INTO my_table (name) VALUES ('Tim');"
$ docker compose exec db psql -U postgres postgres -c "SELECT * FROM my_table;"
name
------
Tim
(1 row)
```

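Remember that container filesystems are ephemeral, though! Anything written to a container’s own filesystem (outside of any volume) is discarded when Docker Compose recreates that container, which happens whenever the service’s image changes. Let’s stop our containers (with Ctrl+C, or docker compose down if you’re detached) and upgrade our database from Postgres version 15.0 to 16.0: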

```yaml
version: '3.8'

services:
  web:
    build: ./web-app
    ports:
      - '3000:3000'
    volumes:
      - './sqlite-data:/data'
  db:
    image: postgres:16.0
    environment:
      POSTGRES_PASSWORD: my_pg_password
      PGDATA: /pg-data
```

Now, bring the containers up again with docker compose up. Running our SELECT command again shows that our database has been cleared:

```console
$ docker compose exec db psql -U postgres postgres -c "SELECT * FROM my_table;"
ERROR: relation "my_table" does not exist
LINE 1: SELECT * FROM my_table;
```

As before, we should make sure we’re not storing anything important inside container filesystems. We can use a bind mount in our docker-compose.yml to make sure Postgres’s database data is persisting on the host filesystem:

```yaml
version: '3.8'

services:
  web:
    build: ./web-app
    ports:
      - '3000:3000'
    volumes:
      - './sqlite-data:/data'
  db:
    image: postgres:16.0
    environment:
      POSTGRES_PASSWORD: my_pg_password
      PGDATA: /pg-data
    volumes:
      - './pg-data:/pg-data'
```

The Postgres image actually declares a Docker volume at the default Postgres data directory (/var/lib/postgresql/data), so if you don’t change the PGDATA environment variable, your data will persist when Docker Compose recreates the container. If you’re comfortable using Docker volumes, this is fine, but it’s still wise to be explicit in your docker-compose.yml about which volumes you’re using.

Docker’s documentation recommends using volumes over bind mounts. Still, I tend to recommend bind mounts for casual users because of their improved visibility on the host system.

Now, when we bring up our containers, Postgres will initialize again, this time into the pg-data directory inside our project directory on the host. You may find that these database files have permissions that make them hard to access without sudo on your host. That’s because Postgres, by default, writes those files as a different Linux user account than the one you’re logged into.
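To confirm that ownership is the cause, you can compare the directory’s owner with your own user ID. Here is a minimal Node sketch, assuming a Linux or macOS host (it relies on `process.getuid()`, which doesn’t exist on Windows). `ownershipReport` is a hypothetical helper name, and the script is written as CommonJS so it runs as a standalone `node` script.

```javascript
// CommonJS so it runs as a plain `node script.js`.
const fs = require('node:fs');

// Report who owns `path` and whether that's the user running this process.
// Uses POSIX uids, so this only makes sense on Linux/macOS hosts.
function ownershipReport(path) {
  const { uid, gid, mode } = fs.statSync(path);
  return {
    uid,
    gid,
    // Permission bits as an octal string, e.g. "700".
    mode: (mode & 0o777).toString(8),
    ownedByMe: uid === process.getuid(),
  };
}

// Hypothetical usage, run from the project directory on the host:
// console.log(ownershipReport('./pg-data'));
```

If `ownedByMe` is false and the mode grants no access to other users, that explains why reading the files requires sudo.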

Let’s connect the web application to our Postgres server. After installing the node-postgres library (npm install --save pg), we update our server code:

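```javascript
import express from 'express';
import pg from 'pg';

// Connection settings for Postgres. 'db' is the service name from docker-compose.yml.
const pool = new pg.Pool({
  host: 'db',
  user: 'postgres',
  database: 'postgres',
  password: process.env.POSTGRES_PASSWORD,
});

// Create the page_views table on startup if it doesn't exist yet.
const initDb = async () => {
  const client = await pool.connect();
  try {
    await client.query(`
      CREATE TABLE IF NOT EXISTS page_views (
        id SERIAL PRIMARY KEY,
        time TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP,
        path TEXT
      )
    `);
  } finally {
    // Return the client to the pool even if the query fails.
    client.release();
  }
};

// Top-level await requires ES modules ("type": "module" in package.json).
await initDb();

const app = express();

app.get('/', async (req, res) => {
  try {
    // Record this view, then count all views so far.
    await pool.query('INSERT INTO page_views (path) VALUES ($1)', [req.path]);
    const { rows } = await pool.query('SELECT COUNT(*) AS count FROM page_views');
    const pageViewCount = rows[0].count;
    res.status(200).send(`Hello! You are <strong>viewer number ${pageViewCount}</strong>.`);
  } catch (err) {
    // Express 4 doesn't forward rejected promises from async handlers, so handle them here.
    console.error(err);
    res.status(500).send('Database error');
  }
});

app.listen(3000, '0.0.0.0', () => {
  console.log('Server listening');
});
```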

This is pretty similar to the SQLite3 code. The important change is toward the top, in how we connect to the Postgres server. I’ve hardcoded the user and database fields, while the password comes from the environment, which we’ll need to configure in our docker-compose.yml.

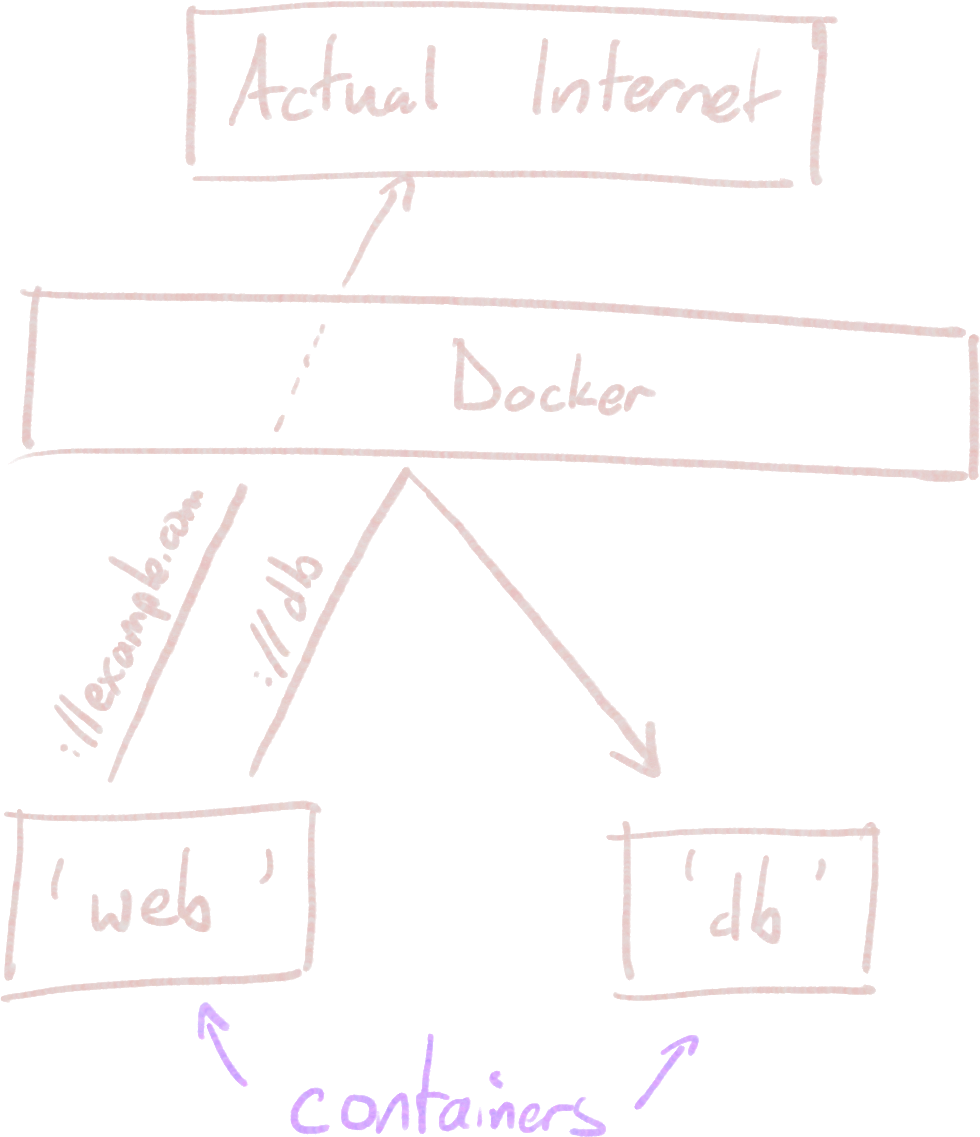

The host field is interesting: we’re using db here, which is the name of the service in our docker-compose.yml. By default, Docker Compose attaches all the services from a single docker-compose.yml file to one shared network, where each service is reachable under its service name. Even though we haven’t added any host-to-container port mappings for our db entry, containers on this network can still reach each other. From inside a container, db works like any other hostname or domain name. For example, if we were to enter the db container and run curl http://web:3000/ (assuming curl was installed), we would see the page served by our web server, even though that hostname means nothing on the host machine.
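You can watch this name resolution from the application’s side with a small Node script, assuming you copy it into the web container and run it there with node. This is a sketch: `resolveHost` is a hypothetical helper, and it uses Node’s built-in `dns.promises.lookup`, which goes through the system resolver. That is the same mechanism Node’s `net` module uses by default when a client like pg opens a TCP connection. It is written as CommonJS so it runs as a standalone script.

```javascript
// CommonJS so it runs as a plain `node script.js`.
const dns = require('node:dns');

// Resolve a hostname through the system resolver and report the result
// instead of throwing, so missing names are easy to spot.
async function resolveHost(hostname) {
  try {
    const { address, family } = await dns.promises.lookup(hostname);
    return { hostname, address, family };
  } catch (err) {
    // ENOTFOUND (or EAI_AGAIN) means the name doesn't resolve from here.
    return { hostname, address: null, error: err.code };
  }
}

// Hypothetical usage: inside the web container, 'db' should resolve to the
// db container's address on the Compose network; on the host it usually won't.
// resolveHost('db').then(console.log);
```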

We’ll need to make a few changes to docker-compose.yml:

```yaml
version: '3.8'

services:
  web:
    build: ./web-app
    ports:
      - '3000:3000'
    environment:
      POSTGRES_PASSWORD: my_pg_password
    restart: unless-stopped
    depends_on:
      - db
  db:
    image: postgres:16.0
    environment:
      POSTGRES_PASSWORD: my_pg_password
      PGDATA: /pg-data
    volumes:
      - './pg-data:/pg-data'
    restart: unless-stopped
```

I’ve removed the volume mount for the web service, since we’re not using SQLite3 anymore. I also added the password environment variable to the web service, which is why the Node script can read process.env.POSTGRES_PASSWORD (process.env is how Node exposes environment variables). Environment variables are set per service in docker-compose.yml, so the db service’s variables aren’t visible to web.
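If the environment: entry is missing from the web service, process.env.POSTGRES_PASSWORD is simply `undefined`, and the problem only surfaces later, when pg first tries to authenticate. A small guard makes that mistake obvious at startup. This is a sketch, and `requireEnv` is a hypothetical helper, not part of Node or pg.

```javascript
// Read a required environment variable, failing fast with a clear message
// instead of letting `undefined` reach the database driver.
function requireEnv(name, env = process.env) {
  const value = env[name];
  if (value === undefined || value === '') {
    throw new Error(`Missing required environment variable ${name}`);
  }
  return value;
}

// Hypothetical usage in the server code:
// const pool = new pg.Pool({
//   host: 'db',
//   user: 'postgres',
//   database: 'postgres',
//   password: requireEnv('POSTGRES_PASSWORD'),
// });
```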

I threw in restart: unless-stopped, which makes Docker restart our containers whenever they exit (for example, after a crash) and when the Docker daemon starts again after the host machine reboots; this is especially useful on servers that might undergo maintenance from time to time. We can still stop the containers manually, and Docker won’t restart them after that.

Finally, I’ve added a depends_on directive to the web service, so Compose won’t start web until db has started. This isn’t always necessary, especially if your web server doesn’t open a database connection before the first request, or if web is configured to retry the connection a few times. Note that depends_on only waits for the db container to start, not for Postgres to be ready: the server may refuse connections for a moment after its container starts, so our web server may crash and only start working after the automatic restart we just configured. There are ways to solve this, but I’ll skip them here. I often omit depends_on entirely for toy projects, but it’s good practice to codify your container dependencies somehow (and to keep them acyclic, if you can!).
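If you’d rather have the web server wait for Postgres itself instead of crashing and relying on the restart policy, the retry approach mentioned above can be sketched as a small helper. This is a minimal sketch: `withRetry` and its `attempts` and `delayMs` options are hypothetical names with arbitrary defaults, and it assumes you wrap the `initDb` function from the server code.

```javascript
// Retry an async operation a few times with a fixed delay between attempts.
// Minimal sketch: no growing backoff, and every error is treated as retryable.
async function withRetry(operation, { attempts = 5, delayMs = 1000 } = {}) {
  let lastError;
  for (let attempt = 1; attempt <= attempts; attempt++) {
    try {
      return await operation();
    } catch (err) {
      lastError = err;
      console.warn(`Attempt ${attempt}/${attempts} failed: ${err.message}`);
      if (attempt < attempts) {
        // Wait before the next attempt.
        await new Promise((resolve) => setTimeout(resolve, delayMs));
      }
    }
  }
  // All attempts failed: surface the last error so the process can exit.
  throw lastError;
}

// Hypothetical usage in the server code, replacing `await initDb();`:
// await withRetry(initDb, { attempts: 10, delayMs: 2000 });
```

A fixed delay keeps the sketch short; a production version might use growing delays and give up right away on errors that can’t be fixed by waiting. Compose can also handle this on its side, by giving db a healthcheck (for example one that runs pg_isready, which ships with the postgres image) and using depends_on with condition: service_healthy.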

Now, we can give it a docker compose up --build to make sure the new version of the web server is loaded.

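It works! The view counter was reset, since we never made any attempt to migrate the SQLite database to Postgres, but the new count will persist. Restarting the server or moving to a newer minor release of Postgres (such as 16.0 to 16.1) shouldn’t pose a problem. A new major version is different: Postgres can’t open a data directory created by a different major version, so a major upgrade needs a migration step such as pg_upgrade or a dump and restore.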
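A Postgres data directory records the major version that created it in its PG_VERSION file (it appears in the /pg-data listing earlier), and a server of a different major version won’t start on it. A quick pre-flight check before bumping the image tag could compare the two. This is a sketch: `checkMajorUpgrade` is a hypothetical helper that takes the file’s contents and an image tag, and it assumes tags shaped like `postgres:<major>[.<minor>]` and Postgres 10 or later (older releases used two-part major versions such as 9.6).

```javascript
// Compare the major version recorded in a data directory's PG_VERSION file
// with the major version of a postgres image tag such as "postgres:16.0".
function checkMajorUpgrade(pgVersionFileContents, imageTag) {
  const dataMajor = Number.parseInt(pgVersionFileContents.trim(), 10);
  // Take the part after the last ':' (e.g. "16.0") and keep the leading number.
  const version = imageTag.slice(imageTag.lastIndexOf(':') + 1);
  const imageMajor = Number.parseInt(version.split('.')[0], 10);
  if (Number.isNaN(dataMajor) || Number.isNaN(imageMajor)) {
    throw new Error(`Cannot compare "${pgVersionFileContents}" with "${imageTag}"`);
  }
  return { dataMajor, imageMajor, needsMigration: dataMajor !== imageMajor };
}

console.log(checkMajorUpgrade('15\n', 'postgres:16.0'));
// → { dataMajor: 15, imageMajor: 16, needsMigration: true }
```

Tags without a numeric version, such as postgres:latest, make the helper throw, since they don’t say which major version you’ll get.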